With CIOs estimating that 44% of tech spend is wasted, much of it on systems staff find too complex to use, AI agents that genuinely reduce cognitive load, rather than add to it, are key to finally getting ROI from your technology investments.

Modern organisations invest heavily in technology, yet many fail to fully leverage the solutions they purchase or develop. According to the ADAPT CFO Edge Survey (Nov 2024), only 46% of organisations utilise between 60–80% of their technology capabilities, leaving a significant portion underused. This underutilisation not only wastes resources but also underscores the challenges of excessive complexity, high cognitive load, and constant context switching in the workplace.

At the same time, 50% of CIOs in Australia plan to boost their AI budgets this year, a clear sign that leaders recognise the need for more efficient, integrated solutions. To capitalise on these investments, AI agents must be deliberately designed to simplify decision-making and reduce mental overhead.

The State of Technology & Adoption

1- Under-utilised Tech Investments

Wasted Spend: CIOs estimate that 44% of money spent on tech capabilities is wasted (ADAPT CFO Edge Survey, 2024). Much of this waste comes from solutions that staff find too complex or cumbersome to learn.

Partial Utilisation: About 25% of organisations believe they're only using 40–60% of their current technology. Many mature businesses (over five years old) juggle 100+ applications in their day-to-day operations.

Supporting Reference: Okta’s Business at Work 2022 report found that large enterprises manage an average of 187 applications. This figure tends to be even higher for organisations that have grown through acquisitions or inherited multiple legacy systems.

2- User Resistance and Training Gaps

Source: ADAPT CFO Edge Survey, 2024

3- The Flood of Single-Use AI Tools

Rapid advances in generative AI have spurred a proliferation of single-purpose tools. Some market analyses estimate that nearly 45% of new AI solutions introduced in 2024 are designed for niche tasks. While these specialised applications can solve specific problems, their disjointed nature compounds complexity, making it harder for businesses to integrate AI solutions seamlessly and fully leverage their technology investments.

Cognitive Load Theory in the Workplace

What is Cognitive Load Theory?

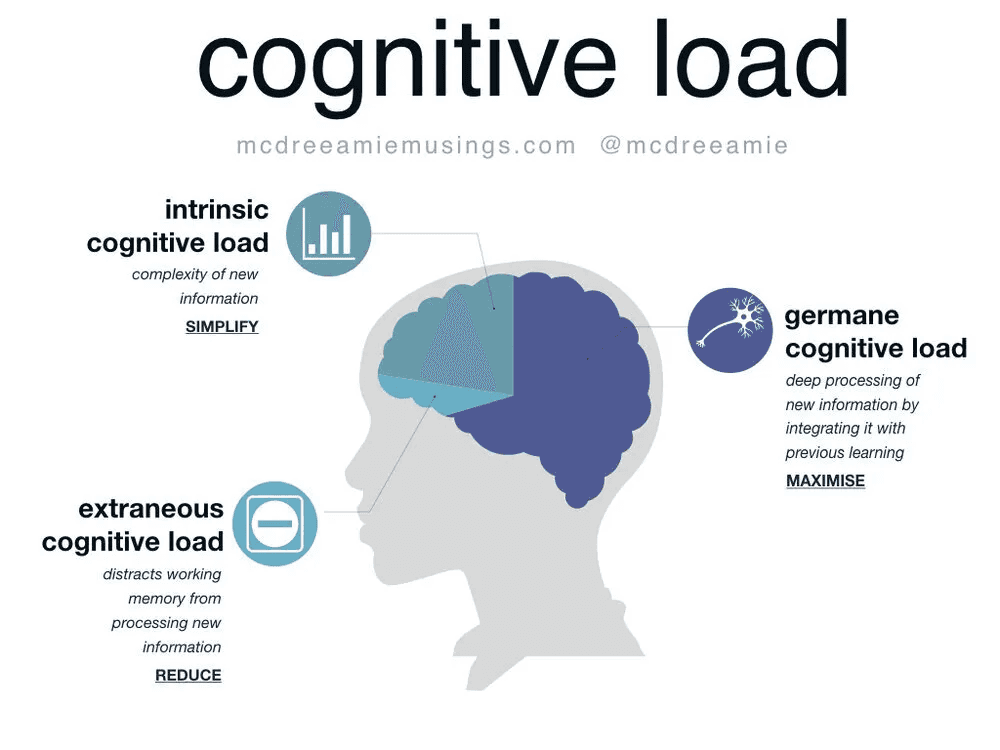

Cognitive load theory (Sweller, 1988) holds that working memory has limited capacity. Overly complex software interfaces and fragmented workflows can quickly overwhelm staff. Key concepts include:

Intrinsic Load: The inherent complexity of a task (for example, analysing financial statements).

Extraneous Load: The extra burden from poorly designed tools or irrelevant features.

Germane Load: The mental effort devoted to learning and mastering new skills, which well-designed AI solutions can help manage.

When organisations overlook these principles, technology adoption suffers, and staff tend to fall back on manual or familiar processes—ultimately wasting both the tech investment and valuable time.

The Role of Cognitive Load and Context Switching

Cognitive Load: In psychological terms, cognitive load is the mental effort required to learn, process, or use information. When staff are overwhelmed, they’re less likely to adopt new tools effectively.

Context Switching: Constantly toggling between apps, dashboards, and data sources drains mental energy and disrupts workflow. Research from the University of California, Irvine, suggests it can take over 20 minutes to regain focus after each interruption.

Impact on Deep Work: When employees aren’t constantly switching contexts, they can devote more time to deep-focus tasks – essential for creative problem-solving, strategic thinking, and driving innovation.

How AI Agents Can Help

AI agents that integrate multiple systems into a single, intelligent layer can significantly reduce the need to bounce between different applications. By handling repetitive tasks automatically and surfacing only the most relevant information, these agents help staff maintain focus and lower their cognitive load.

However, their success lies in ensuring that both the interface and interaction design are thoughtful, user-centric, and seamlessly integrated into existing workflows—so that users feel confident, supported, and in control of the technology.

Designing AI Agents for Reduced Mental Overhead

Below are four key design strategies that AI agents can use to help reduce cognitive load.

1- Integration with Existing Workflows

Embedding AI agents in the tools and processes employees already use reduces the need to learn new systems or switch between applications.

In this approach, AI is positioned as a natural extension of current platforms, helping employees stay within familiar environments. This lowers the barrier to adoption and ensures that AI augments, rather than disrupts, existing work routines, making it more intuitive and valuable for users.

The Single Pane of Glass Concept

A “single pane of glass” is a user-centric design approach that consolidates multiple systems or data sources into one unified area. When users must constantly switch contexts or mentally piece together information from various tools, it increases extraneous cognitive load—the mental effort required to navigate cluttered or disjointed interfaces. By providing a single, integrated view, you reduce the number of steps needed to complete tasks, lower the mental overhead of recalling which app to open next, and keep users focused on decision-making rather than busywork.

This streamlined experience frees up mental capacity (germane load) for deeper, value-adding tasks instead of routine, repetitive navigation.

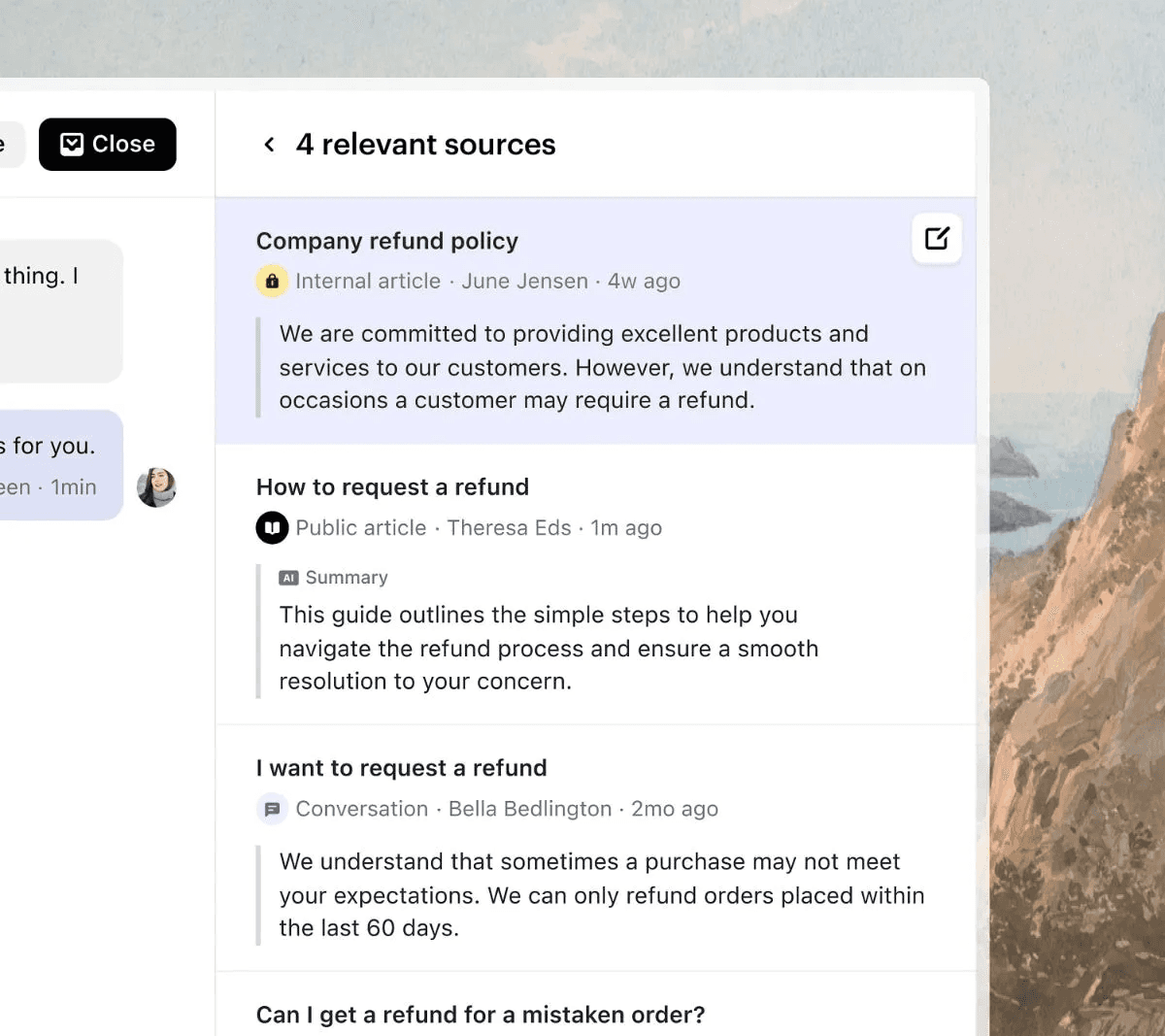
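To make the idea concrete, here is a minimal Python sketch of a single-pane view that assembles one record from three systems. The source names (`CRM`, `TICKETS`, `BILLING`), the `CustomerView` fields and the `single_pane` function are all hypothetical; in-memory dictionaries stand in for what would, in practice, be API calls to each platform.

```python
from dataclasses import dataclass

# Hypothetical source systems. In-memory dicts stand in for API clients
# to a CRM, a ticketing tool and a billing platform.
CRM = {"C-001": {"name": "Acme Pty Ltd", "owner": "Priya"}}
TICKETS = {"C-001": [{"id": 42, "status": "open"}, {"id": 43, "status": "closed"}]}
BILLING = {"C-001": {"overdue_invoices": 1}}


@dataclass
class CustomerView:
    """One consolidated record, so the user does not have to open three apps."""
    customer_id: str
    name: str
    owner: str
    open_tickets: int
    overdue_invoices: int


def single_pane(customer_id: str) -> CustomerView:
    """Pull the relevant slice from each system and merge it into one view."""
    crm = CRM.get(customer_id, {})
    tickets = TICKETS.get(customer_id, [])
    billing = BILLING.get(customer_id, {})
    return CustomerView(
        customer_id=customer_id,
        name=crm.get("name", "unknown"),
        owner=crm.get("owner", "unassigned"),
        # Summarise rather than dump: a count of open tickets, not the full list.
        open_tickets=sum(1 for t in tickets if t["status"] == "open"),
        overdue_invoices=billing.get("overdue_invoices", 0),
    )


print(single_pane("C-001"))
```

The design choice worth noting is that the view summarises (a ticket count) rather than mirroring each source screen; that is what removes the navigation work, not just the extra logins.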

💼 Case Study: Intercom’s Fin AI

According to Intercom, Fin AI, a secondary chat that searches internal knowledge to surface answers to customer queries faster, helps close an additional 31% of customer conversations each day compared with working without it, and has increased response rates to customers by ~71%.

It reduces cognitive load by providing context-aware assistance in a familiar environment, making adoption straightforward.

Source: Intercom (2024)

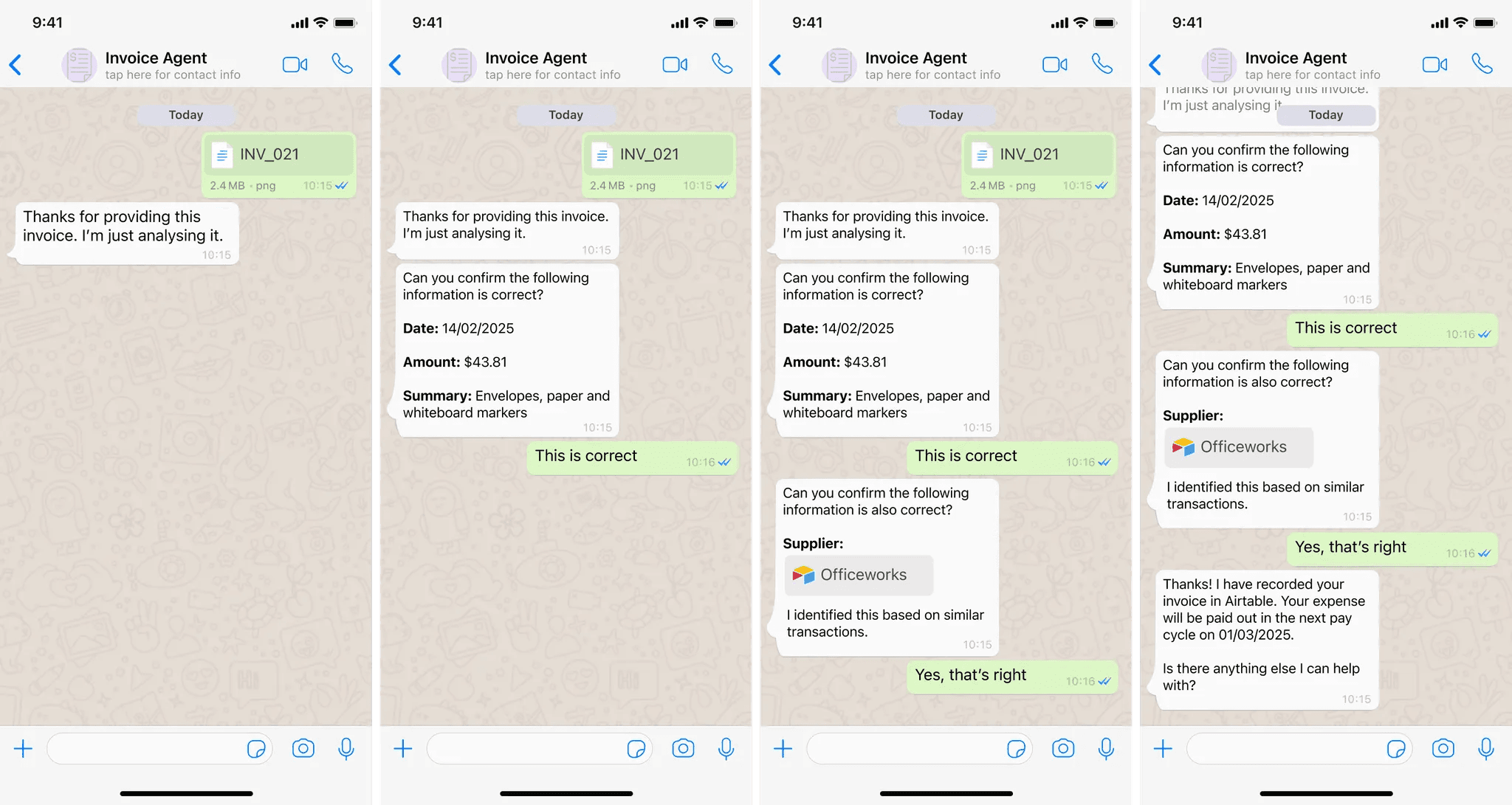

2- Context-Aware Assistance

When using context-aware assistance, AI agents leverage information about the user’s current situation—such as location, time of day, or recent activity—to offer timely, relevant support. This design principle ensures that interactions are both efficient and personalised, minimising unnecessary interruptions while providing targeted help exactly when it's needed.

Context-Aware Assistance can handle the inherent complexity of data retrieval by proactively surfacing only the essential metrics or information for the user’s task.
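A rough sketch of this idea, assuming the agent knows which task the user is working on and what they looked up recently. The metric catalogue, the task-to-metric mapping, `UserContext` and `surface_metrics` are illustrative assumptions, not a particular product's API.

```python
from dataclasses import dataclass, field

# Hypothetical catalogue of everything the agent could show.
ALL_METRICS = {
    "cash_balance": 1_200_000.0,
    "overdue_receivables": 85_000.0,
    "headcount": 240.0,
    "open_roles": 12.0,
    "server_uptime_pct": 99.95,
    "ticket_backlog": 37.0,
}

# Hypothetical mapping from the user's current task to the metrics it needs.
RELEVANT_BY_TASK = {
    "month_end_close": ["cash_balance", "overdue_receivables"],
    "hiring_review": ["headcount", "open_roles"],
    "incident_triage": ["server_uptime_pct", "ticket_backlog"],
}


@dataclass
class UserContext:
    active_task: str
    recent_lookups: list[str] = field(default_factory=list)


def surface_metrics(ctx: UserContext) -> dict[str, float]:
    """Return only the metrics that matter for what the user is doing right now."""
    keys = list(RELEVANT_BY_TASK.get(ctx.active_task, []))
    # Recent activity is a second context signal: keep anything the user
    # looked up recently, ignoring names the catalogue does not know.
    for key in ctx.recent_lookups:
        if key in ALL_METRICS and key not in keys:
            keys.append(key)
    return {key: ALL_METRICS[key] for key in keys}


print(surface_metrics(UserContext("month_end_close", ["ticket_backlog"])))
```

Everything outside the returned dictionary is still available on request; the agent simply does not put it in front of the user by default.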

💼 Case Study: Morgan Stanley’s AI Assistant for Financial Advisors

In the wealth management division of Morgan Stanley, a GPT-4-powered AI chatbot has been deployed to help financial advisors answer client questions instantly by searching internal knowledge bases. Instead of manually digging through reports or research (which could take many minutes or require a callback), advisors can ask the AI assistant during the call and get answers in 15–20 seconds. One advisor noted he keeps the AI “open on his computer his entire workday” and that it has become like a “partner” in client calls. The assistant has been rolled out to ~16,000 advisors, with over 98% adoption across advisor teams, reflecting its usefulness.

The agent saves significant time and cognitive effort, allowing advisors to focus more on the client conversation rather than searching for information.

This example shows how an AI agent can streamline decision-making in finance by aggregating data from many documents and delivering just-in-time answers, reducing the cognitive load on employees (and improving client service).

Source: Business Insider (2024)

3- Adaptive Notifications

Using adaptive notifications involves aggregating and prioritising alerts based on user behaviour and context, so users are only interrupted when truly necessary. By filtering out non-critical alerts and delivering a concise summary when appropriate, this approach reduces context switching and notification fatigue, and helps maintain focus for longer periods of time.

Adaptive notifications reduce extraneous load by removing unnecessary mental overhead, such as juggling multiple tools or being bombarded by irrelevant alerts.
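The sketch below shows one simple way to route alerts: anything at or above a severity threshold interrupts immediately, low-severity alert kinds the user keeps dismissing are muted, and everything else is rolled into a deduplicated digest. The 1 to 5 severity scale, the threshold of 4 and the three-dismissal rule are assumptions chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str
    kind: str
    severity: int  # assumed scale: 1 (informational) to 5 (critical)


INTERRUPT_THRESHOLD = 4  # assumption: tune per team


def learn_muted(dismissals: Counter, min_dismissals: int = 3) -> frozenset:
    """Alert kinds the user has dismissed repeatedly without acting on them."""
    return frozenset(kind for kind, n in dismissals.items() if n >= min_dismissals)


def route(alerts, muted_kinds=frozenset()):
    """Split alerts into those that interrupt now and a deduplicated digest."""
    interrupt, digest = [], Counter()
    for alert in alerts:
        # Learned from behaviour: drop kinds this user ignores, but never mute critical ones.
        if alert.kind in muted_kinds and alert.severity < INTERRUPT_THRESHOLD:
            continue
        if alert.severity >= INTERRUPT_THRESHOLD:
            interrupt.append(alert)
        else:
            digest[(alert.source, alert.kind)] += 1  # aggregate repeats into one line
    return interrupt, digest


def summarise(digest: Counter) -> list[str]:
    """One line per alert type, most frequent first."""
    return [f"{n}x {kind} from {source}" for (source, kind), n in digest.most_common()]


alerts = [Alert("crm", "sync_lag", 2), Alert("crm", "sync_lag", 2),
          Alert("db", "outage", 5), Alert("hr", "survey_reminder", 1)]
muted = learn_muted(Counter({"survey_reminder": 3}))
now, later = route(alerts, muted)
print(now)
print(summarise(later))
```

Four raw alerts become one interruption and a single digest line, which is the reduction in context switching the paragraph describes.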

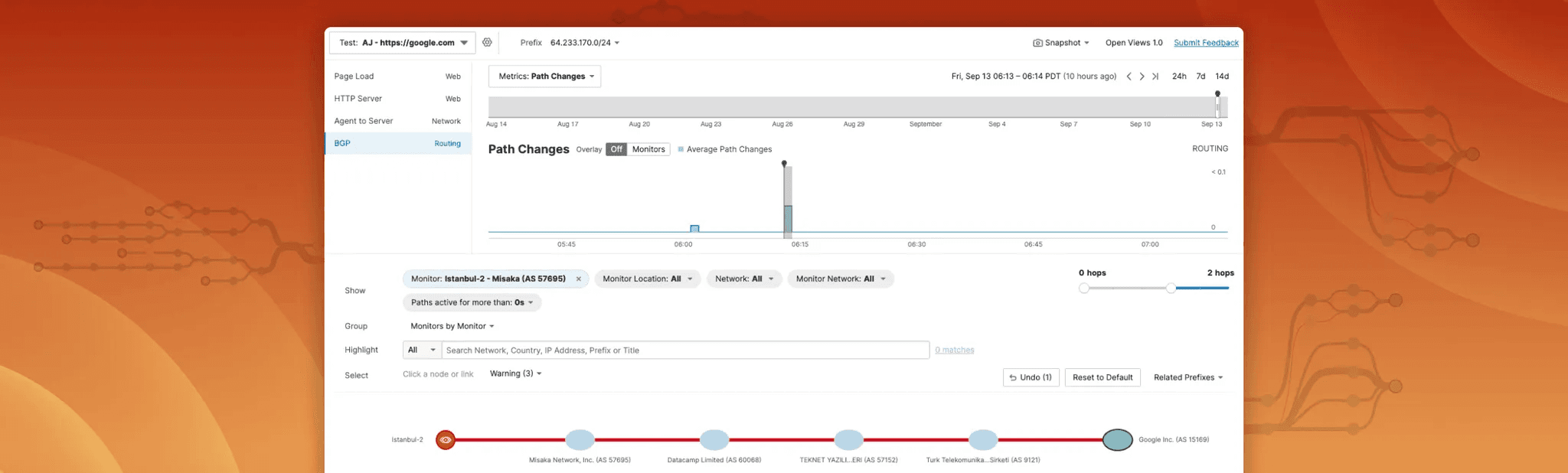

💼 Case Study: Cisco ThousandEyes Adaptive Alert Detection

In a bid to reduce alert fatigue and enhance operational efficiency, Cisco ThousandEyes has introduced Adaptive Alert Detection, a machine learning–powered system that refines alert generation based on real-time network conditions.

Instead of overwhelming IT teams with a flood of notifications, the system aggregates alerts and dynamically adjusts thresholds to display only the most actionable items. Early adopters report that this adaptive approach cuts false positives by nearly half, enabling teams to focus on resolving genuine issues swiftly. This example illustrates how adaptive notifications can streamline decision-making by filtering out noise and reducing cognitive overload, ultimately boosting productivity and improving service reliability.
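The case study does not describe the model behind Adaptive Alert Detection, so the following is not ThousandEyes' algorithm. It is a generic sketch of a dynamically adjusted threshold: a reading is flagged only when it exceeds the rolling mean of recent readings by `k` standard deviations. The window size, `k`, `min_history` and the `min_sigma` floor are assumptions to tune per metric, and the latency readings are made up.

```python
from collections import deque
from statistics import mean, stdev


class AdaptiveThreshold:
    """Flag a reading only if it sits well above the recent baseline.

    Generic rolling mean + k * standard deviation rule, not a vendor's model.
    """

    def __init__(self, window: int = 20, k: float = 3.0,
                 min_history: int = 5, min_sigma: float = 1.0):
        self.history = deque(maxlen=window)     # only the most recent readings count
        self.k = k
        self.min_history = max(2, min_history)  # stdev needs at least two points
        self.min_sigma = min_sigma  # floor so a perfectly flat baseline is not hair-trigger

    def observe(self, value: float) -> bool:
        alert = False
        if len(self.history) >= self.min_history:
            baseline = mean(self.history)
            spread = max(stdev(self.history), self.min_sigma)
            alert = value > baseline + self.k * spread
        self.history.append(value)  # the threshold adapts as new data arrives
        return alert


latency = AdaptiveThreshold(window=10, k=3.0)
readings = [50, 52, 49, 51, 50, 53, 48, 50, 120]  # hypothetical latency in ms
flags = [latency.observe(ms) for ms in readings]
print(flags)
```

Because the baseline moves with the data, a normally noisy metric needs a larger jump before it alerts than a normally stable one, which is the kind of behaviour that keeps false positives down.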

4- Progressive Disclosure

Digital products that utilise progressive disclosure reveal more advanced features or detailed information gradually as the user needs them. This design principle prevents overwhelming users at first glance, enabling a clean, simple interface that progressively unfolds additional layers of complexity only when required.

By revealing complexity gradually, progressive disclosure builds understanding of a task or topic at a comfortable pace, turning some of the mental effort into beneficial germane load that aids skill development.
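As a minimal sketch, assuming an agent's answer can be split into layers ordered from simplest to most detailed, progressive disclosure reduces to returning only the layers the user has asked to see. The layer names, their contents and the `disclose` function are hypothetical.

```python
# Hypothetical layers of one agent answer, ordered simplest first.
LAYERS = [
    ("summary", "Q3 spend is tracking over budget."),
    ("drivers", "Most of the overrun comes from cloud hosting."),
    ("line_items", "Full line-item table, loaded only on request."),
]


def disclose(level: int) -> dict[str, str]:
    """Return layers 0..level; deeper layers stay hidden until the user asks."""
    if level < 0:
        return {}
    return dict(LAYERS[: level + 1])


# First glance: just the headline.
print(disclose(0))
# User clicks "Why?": one more layer unfolds.
print(disclose(1))
```

Keeping the layers as an ordered list means the interface only needs to track one number per user, the depth they have asked for.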

Visualised Cues & Simplified Interfaces

When rolling out an AI agent, the interface should break tasks into short steps. This reduces extraneous cognitive load by sparing staff from confronting all functionality at once. To achieve this, give agent experiences a clear visual hierarchy, chunked information, and minimal text instructions.

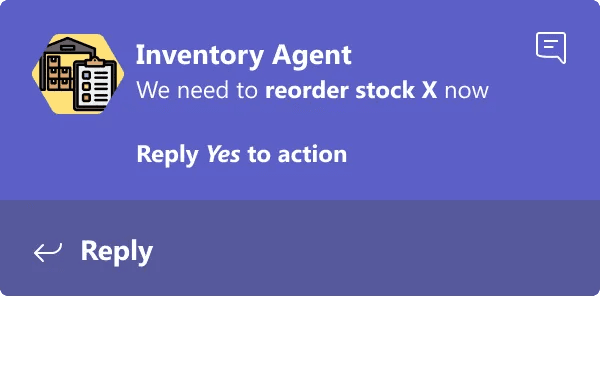

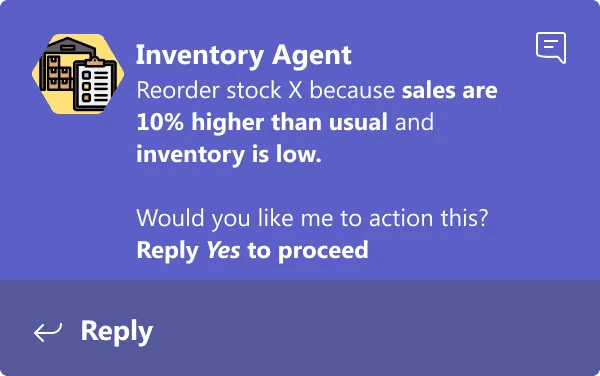

Example: Requests for human input are disclosed progressively, reducing confusion and the cognitive effort required to respond.

5- Addressing Cognitive Load and Context Switching

Effective AI agents must reduce both the intrinsic and extraneous load on staff while minimising the mental strain of constant context switching. By consolidating information within a single interface and filtering out irrelevant alerts, organisations can cut down on the time employees spend toggling between multiple applications.

This approach to AI agents not only streamlines tasks but also supports deeper, more focused work—a key driver of innovation and productivity.

Best Practices for Implementation

Below are some practical, step-by-step tips for each recommended practice.

1. Start with User Research

Key Steps:

Run surveys and conduct interviews to pinpoint staff pain points.

Map current workflows to identify redundant steps and frequent context switches.

Create user personas that focus on specific tasks, motivations, and challenges.

Example Questions:

“Which tools do you use every day, and what’s your biggest frustration with them?”

“If you had a magic wand, what 3 weekly tasks would you want to automate?”

“What decisions do you have to make and what information do you need to make them?”

2. Iterative Design and Testing

Continuous iteration keeps the AI solution user-friendly and effective and focuses the team on delivering value to users sooner rather than later. Insights from user research will often determine which core features to deliver first by highlighting frequent and common pain points.

Testing and gathering user feedback can highlight any lingering friction points where employees still switch apps to accomplish tasks the AI agent was supposed to handle.

Iterative delivery of AI agents includes:

Building a minimum viable product (MVP) featuring core functionalities.

Testing with a small group via pilot programmes or sandbox environments.

Regularly gathering feedback and tweaking the design to reduce complexity.

3. Transparent Communication

Key Steps:

Clearly explain what the AI agent can and cannot do.

Provide short, role-specific training modules (think 5-minute micro-learnings).

Offer ongoing Q&A sessions or drop-in office hours for extra support.

Best Learning Methods:

Micro-learning: Quick, focused lessons that staff can complete during breaks. Micro-learning reduces extraneous load by focusing on one skill at a time, making it easier for staff to internalise new agent-driven processes without feeling overwhelmed.

Peer-to-Peer Sessions: Sessions that encourage a sense of community and shared learning.

Interactive Workshops: Hands-on sessions where employees try out AI features in real time.

4. Combine AI Agents with Human Oversight

Behavioural economics also examines how people trust or doubt AI outputs. Some users blindly trust algorithmic recommendations (automation bias), while others are overly sceptical of them (algorithm aversion). Combining AI agents with human oversight helps address both biases.

Well-designed AI agents provide guidance and smart defaults but allow the human to override them, balancing efficiency with the human need for control. Similarly, a conversational, friendly interface reduces intimidation and encourages usage.

By ensuring humans remain in the loop for critical decisions and providing transparent, brief rationales, organisations can balance efficiency with sound human judgment.

🌈 Example: Using Human Oversight for an Inventory AI Agent

(Figure: the same agent message shown "Without rationale" and "With rationale and friendly tone".)
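The pattern in this example can be sketched in a few lines: the agent proposes a default with a short, plain-language rationale, and a human approves, rejects or overrides it before anything happens. The reorder heuristic (cover supplier lead time plus a few safety days), the SKU and figures, the field names and the `recommend` and `finalise` functions are assumptions for illustration, not a specific inventory system's logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    sku: str
    reorder_qty: int
    rationale: str  # short, plain-language "why", shown next to the suggestion


def recommend(sku: str, on_hand: int, daily_demand: float,
              lead_time_days: int, safety_days: int = 3) -> Recommendation:
    """Simple reorder heuristic (an assumption): cover lead time plus safety days."""
    target = round(daily_demand * (lead_time_days + safety_days))
    qty = max(0, target - on_hand)
    rationale = (
        f"You sell about {daily_demand:g} a day and restocking takes "
        f"{lead_time_days} days, so I'd order {qty} to stay covered "
        f"with {safety_days} days to spare."
    )
    return Recommendation(sku, qty, rationale)


def finalise(rec: Recommendation, approved: bool,
             override_qty: Optional[int] = None) -> dict:
    """The human has the last word: an override wins, otherwise approve or reject."""
    if override_qty is not None:
        return {"sku": rec.sku, "qty": override_qty, "decided_by": "human override"}
    if approved:
        return {"sku": rec.sku, "qty": rec.reorder_qty, "decided_by": "human approved default"}
    return {"sku": rec.sku, "qty": 0, "decided_by": "human rejected"}


rec = recommend("A-17", on_hand=100, daily_demand=20, lead_time_days=5)
print(rec.rationale)
print(finalise(rec, approved=True, override_qty=80))
```

The agent never places the order itself; `finalise` is the only path to a quantity, so the human stays in the loop for the decision while the default and its rationale do most of the thinking.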

5. Training and Change Management

Enabling effective implementation of AI agents in a business context requires well-constructed onboarding processes, real-time assistance and transparency about the gains achieved.

Key Steps:

Involve end-users in the design process (particularly effective for AI agents conducting internal tasks).

Appoint champions or super-users to mentor their colleagues.

Use gamification (like achievement badges) to encourage exploration of AI features.

Set up real-time support channels (e.g. via Slack or Teams) for immediate help.

Actively communicate the gains made by AI agents to demonstrate value and encourage use.

6. Enhance Productivity with Focus Modes

When implementing AI agents, make sure you are not adding to employees’ cognitive load with additional notifications or messages. Use platform features or employee policies that let staff set ‘focus modes’ or schedule time for uninterrupted deep work. During these periods, agents can queue non-urgent notifications for a designated break, minimising context switching.
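A minimal sketch of the queueing behaviour, assuming focus windows are set per person as clock times within a single day (windows that cross midnight are not handled). Urgent messages always go through; everything else waits until `flush` is called after the window ends. The `Notifier` and `FocusWindow` classes, the messages and the dates are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, time


@dataclass
class FocusWindow:
    start: time
    end: time

    def contains(self, t: time) -> bool:
        # Same-day window only; start inclusive, end exclusive.
        return self.start <= t < self.end


@dataclass
class Notifier:
    focus: list[FocusWindow]
    queued: list[str] = field(default_factory=list)
    delivered: list[str] = field(default_factory=list)

    def in_focus(self, now: datetime) -> bool:
        return any(w.contains(now.time()) for w in self.focus)

    def notify(self, msg: str, urgent: bool, now: datetime) -> None:
        if urgent or not self.in_focus(now):
            self.delivered.append(msg)
        else:
            self.queued.append(msg)  # hold non-urgent items until the break

    def flush(self, now: datetime) -> list[str]:
        """Deliver queued messages once the focus window is over."""
        if self.in_focus(now):
            return []
        batch, self.queued = self.queued, []
        self.delivered.extend(batch)
        return batch


n = Notifier(focus=[FocusWindow(time(9), time(11))])
n.notify("Weekly report is ready", urgent=False, now=datetime(2025, 3, 3, 9, 30))
n.notify("Payments API is down", urgent=True, now=datetime(2025, 3, 3, 9, 45))
print(n.delivered)                           # only the urgent message so far
print(n.flush(datetime(2025, 3, 3, 11, 0)))  # queued items arrive at the break
```

Delivering the queue as one batch at the break turns several small interruptions into a single, planned context switch.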

Conclusion

Reducing cognitive load isn’t just a nice-to-have—it’s a strategic necessity for organisations looking to maximise the ROI of their tech investments. As multiple surveys and reports show, underutilisation often stems from overwhelming complexity and constant context switching. By consolidating workflows, adapting to user behaviour, and using principles like progressive disclosure, AI agents can free up staff to focus on the work that really matters.

Remember: The aim is to enhance existing habits and centralise capabilities, not to add another layer of disconnected tools. This approach not only boosts utilisation rates but also paves the way for real innovation, creativity, and overall employee satisfaction.

Acknowledgement

This whitepaper was co-created with the assistance of AI-based research and writing tools, combined with human oversight and editorial input.

References & Further Reading

ADAPT (2025). CIO Edge Survey, February 2025.

Okta (2022). Business at Work 2022.

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12(2), 257–285.

University of California, Irvine (2015). Study on Workplace Context Switching.

Statista (2024). Average number of SaaS apps used in the U.S. 2024.

Business Insider (2024). Morgan Stanley is betting on AI to free up advisors' time to be 'more human.' Nearly 100% of advisor teams use it, and here's how.

Intercom (2024). Introducing Intercom’s AI Copilot.
